What Does the Data Say?

You may be wondering what AI, a sermon from Sarah Jakes Roberts, and a move to London all have to do with self-preservation. Allow me to make it make sense.

Self-preservation: the protection of oneself from harm or death, especially regarded as a basic instinct in human beings and animals.

Happy Wednesday, beautiful people!

Excuse my longer-than-expected absence, but I have officially moved across the pond to London! I’ll be sharing more details of my experiences soon, but in short, the last 3 weeks have been amazing. I have built quite the community (much quicker than I anticipated), and between getting settled in, running around town with them, and diving into new curiosities, I have neglected my writing. But I’m back! So let’s get to it.

Last weekend I came across the sermon, A Bold Move, where Pastor Sarah Jakes Roberts talked about self-preservation, and there were two lines that stuck with me:

Self-preservation is a method, not the mission.

Not every self is worth preserving.

While both points are super potent and deserve time to marinate individually, I was challenged to figure out what this meant for me. I started asking myself whether there were certain versions of myself I was holding on to, especially seeing how I was embarking on my newest adventure to London. I felt a heightened sense that maybe I had succumbed to this subconsciously, because what better way to feel safe in a new environment than to cling to what’s familiar?

In order to understand why I kept, and am still keeping, certain versions of myself even when they no longer serve me, I had to dig deeper into the history of my own experiences and form a fact pattern of why I believed (and still believe) certain things.

WHAT DOES THE DATA SAY?

Oddly enough, this is where I began to see parallels with what Dr. Joy Buolamwini says in the Netflix documentary Coded Bias, where she talks about how important data truly is. Dr. Joy is a computer scientist at MIT, the Founder of the Algorithmic Justice League, and a leading researcher in algorithmic discrimination within artificial intelligence.

For many of you, ChatGPT is the first time artificial intelligence has landed on your radar, but AI has been around for some time and is embedded in much of our daily lives. By definition, artificial intelligence leverages computers and machines to mimic the problem-solving and decision-making capabilities of the human mind. Examples include self-driving cars, facial recognition, recommended videos on YouTube or Instagram, chatbots, etc.

A quick side story…

Headquartered here in London is DeepMind, the artificial intelligence arm of Alphabet. I tried pulling up to the office last week hoping I could get a tour (LOL, it was definitely a long shot, but I was hoping some of the ease I’ve been having in life would somehow show up here too) and obviously the answer was no. If y’all could’ve seen the way they looked at me 😂. All that to say, if anybody has a DeepMind plug, let ya girl know!

Back to the story…

Dr. Joy says, “data is what we’re using to teach machines how to learn different types of patterns. AI is based on data and data is a reflection of our history. So the past dwells within our algorithms.”

As we’re currently experiencing, biases in algorithms are nothing new. We see the issues and implications clearly with social media and search algorithms. AI bias occurs because humans choose the data that algorithms use, and also decide how the results of those algorithms will be applied. We know the people feeding and reinforcing the machine’s data typically don’t look like us, so unfortunately it’s no surprise when these shortcomings occur.
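For my fellow nerds, here is a tiny toy sketch in Python of how that plays out. To be clear, every number in it is made up by me for illustration, the names (`train_threshold`, `error_rate_by_group`, groups "A" and "B") are hypothetical, and a real facial recognition system is far more complicated than a single cut-off on a single score. It only shows the idea: a model that mostly sees one group in its history tends to get the other group wrong.

```python
from collections import defaultdict

# Every number below is invented for illustration; none of it is real data.
# Each sample is (group, score, is_match): "score" stands in for whatever
# signal a model reads, and is_match is the true answer.
GROUP_A = [("A", 0.1, False), ("A", 0.2, False), ("A", 0.3, False),
           ("A", 0.7, True), ("A", 0.8, True), ("A", 0.9, True)]
GROUP_B = [("B", 0.1, False), ("B", 0.2, False),
           ("B", 0.4, True), ("B", 0.5, True)]

# Skewed history: plenty of group A, a single example from group B.
skewed_training = GROUP_A + GROUP_B[:1]
# Fuller history: both groups are represented.
balanced_training = GROUP_A + GROUP_B

# New people the model has never seen.
test_people = [("A", 0.2, False), ("A", 0.8, True),
               ("B", 0.15, False), ("B", 0.2, False),
               ("B", 0.45, True), ("B", 0.5, True)]


def train_threshold(samples):
    """Pick the score cut-off that makes the fewest mistakes on the training data."""
    candidates = sorted({score for _, score, _ in samples})
    best_cut, best_errors = None, None
    for cut in candidates:
        # The model says "match" whenever score >= cut.
        errors = sum((score >= cut) != is_match for _, score, is_match in samples)
        if best_errors is None or errors < best_errors:
            best_cut, best_errors = cut, errors
    return best_cut


def error_rate_by_group(samples, cut):
    """Share of wrong answers per group for a given cut-off."""
    wrong, total = defaultdict(int), defaultdict(int)
    for group, score, is_match in samples:
        total[group] += 1
        wrong[group] += (score >= cut) != is_match
    return {group: wrong[group] / total[group] for group in total}


skewed_cut = train_threshold(skewed_training)
balanced_cut = train_threshold(balanced_training)

print("skewed history:  ", error_rate_by_group(test_people, skewed_cut))
print("balanced history:", error_rate_by_group(test_people, balanced_cut))
```

In this toy setup, the model trained on the skewed history gets group A right every time but misses half of group B, while the one trained on the fuller history gets both groups right. Same learning method, different data, very different outcomes.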

However, the problem is that much bigger within AI simply because it has the ability to govern our liberties as a society. An example of this would be facial recognition. What we know to be true is that facial recognition AI often misidentifies people of color.

The U.K. is coming under a lot of heat because it now actively uses live facial recognition as a means to “combat crimes and terrorism,” even though an independent review of the Metropolitan Police’s public trials showed only a 19% success rate in the technology’s ability to correctly match photos to people.

In the U.S., there are already multiple stories being told about faulty facial recognition software. A Black man in Detroit was locked up for 30 hours due to “the computer getting it wrong,” and another Black man spent almost a week in jail just this Thanksgiving due to incorrect facial recognition.

China is the world leader when it comes to facial recognition technology and it’s far too much to put into a single bullet, so I strongly suggest y’all just watch this video here.

If/when law enforcement decides to commit fully to only using facial recognition tools, this bias could lead to more wrongful arrests, or in some cases even death, for people of color.

I don’t say this to scare you, but to show you how powerful incorrect or missing data can be. A potential future of life or death.

Is that not what self-preservation is though? Using data to make decisions that govern the liberties we give to ourselves as individuals?

It’s our mind (artificial intelligence), using our lived experiences (the data), to create a fact pattern that then dictates or “validates” the decisions that we think will keep us safe.

Because we are human and have our own unconscious and conscious biases, skewed views and opinions can create faulty data points, which through second-order consequences create bad algorithms that WE then operate our daily lives from… which can, for the sake of my analogy, create bad artificial intelligence.

So the better question here is: how biased is your life’s algorithm? What data are you using to inform and train your brain?

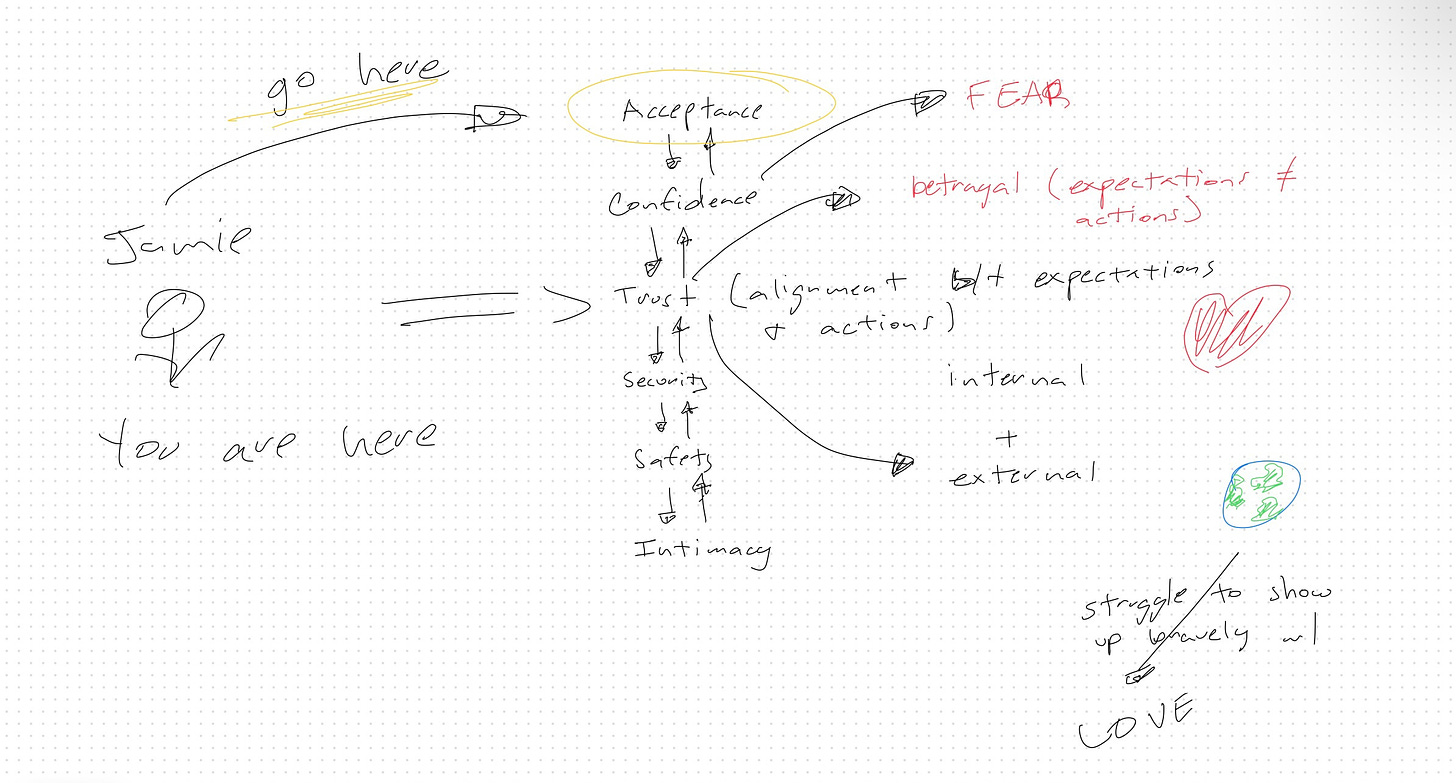

After further thought, I realized that a lot of unresolved emotions from betrayals in old relationships had heavily skewed my data, causing me to be timid when it came to forming bonds with new people.

Seeing how I had just moved to a brand new country and only knew two people, the problem was evident. Over the last couple of weeks, I have been continually adding better-quality data by working to heal from deep wounds through acknowledgment and acceptance. The hurt self is the self I am currently working to release because, as Sarah Jakes Roberts said, not every self is worth preserving.

The better the data, the better the intelligence.

With love always,

Jamie ✈️ 🌎

Side note: I’d also like to thank my friends for pulling out their notepad and sitting with me on FaceTime for 3 hours to draw what I was articulating. I’ve added it here so y’all can see how I’m personally processing and creating better data points for myself. Excuse their chicken scratch, I was talking fast 😂

Questions I’m thinking through:

What versions of self am I holding onto that no longer serve me?

What data am I using to inform my decision-making?

How can I create the best form of AI for myself?

Links I’m loving:

Documentary: Coded Bias

Sermon: A Bold Move

Article: The Problem with Biased AI’s